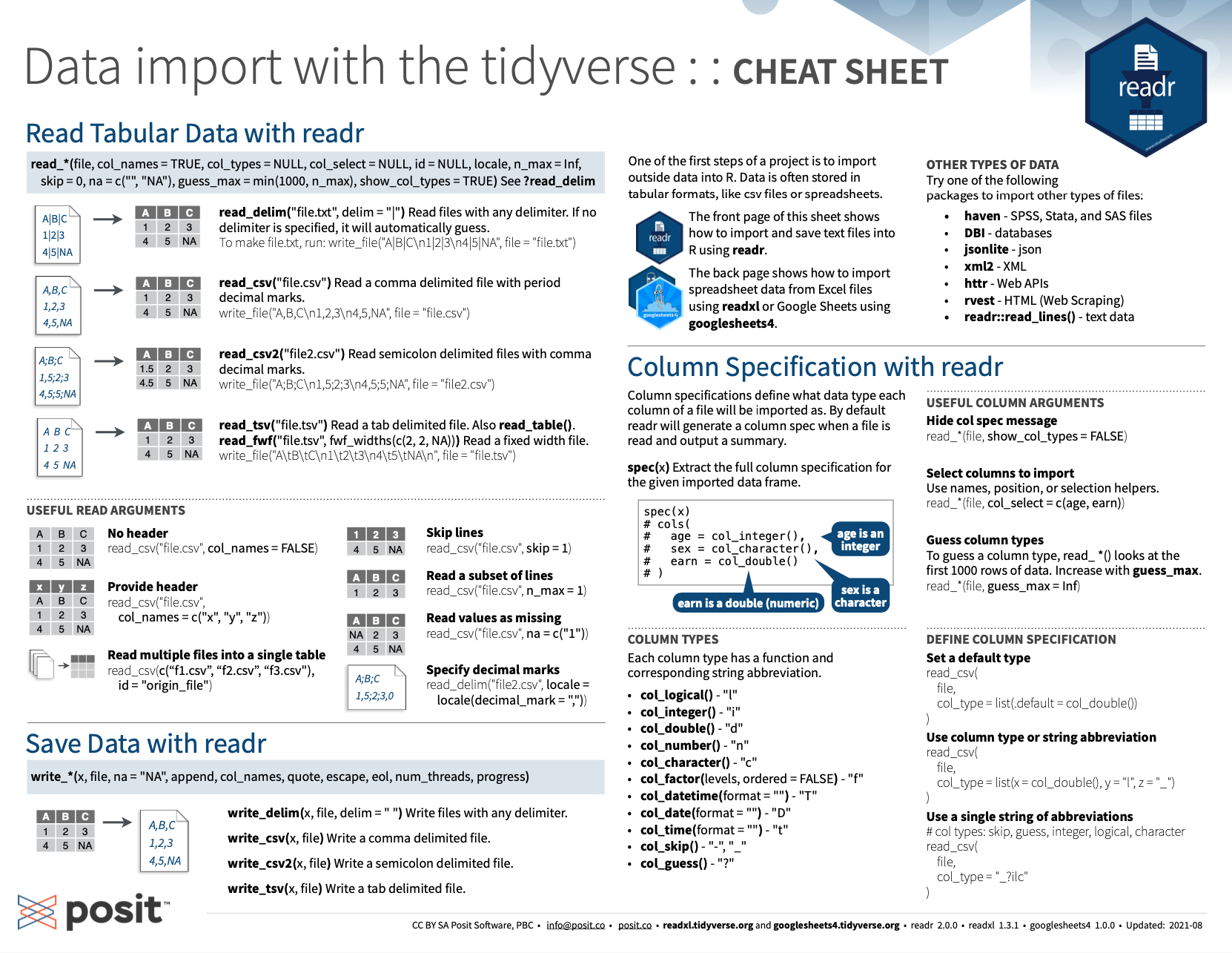

Variable: a quantity, quality, or property that you can measure.

Observation: a set of values that display the relationship between variables. To be an observation, values need to be measured under similar conditions, usually on the same observational unit at the same time.

The tidyr package (version 1.1.3, March 3, 2021; title "Tidy Messy Data") provides tools to help create tidy data, where each column is a variable, each row is an observation, and each cell contains a single value. tidyr contains tools for changing the shape (pivoting) and hierarchy (nesting and unnesting) of a dataset.

The Tidyverse Cheat Sheet for Beginners guides you through the basics of the tidyverse and two of its core packages: dplyr and ggplot2. The tidyverse is a powerful collection of R packages for data science, designed to help you transform and visualize data.

The Data Import with readr, tidyr, and tibble Cheat Sheet shows on its front side how to read text files into R with readr. The reverse side shows how to create tibbles with tibble and how to lay out tidy data with tidyr.

Complete List of Cheat Sheets and Infographics for Artificial intelligence (AI), Neural Networks, Machine Learning, Deep Learning and Big Data.

Content Summary

Neural Networks

Neural Networks Graphs

Machine Learning Overview

Machine Learning: Scikit-learn algorithm

Scikit-Learn

Machine Learning: Algorithm Cheat Sheet

Python for Data Science

TensorFlow

Keras

Numpy

Pandas

Data Wrangling

Data Wrangling with dplyr and tidyr

Scipy

Matplotlib

Data Visualization

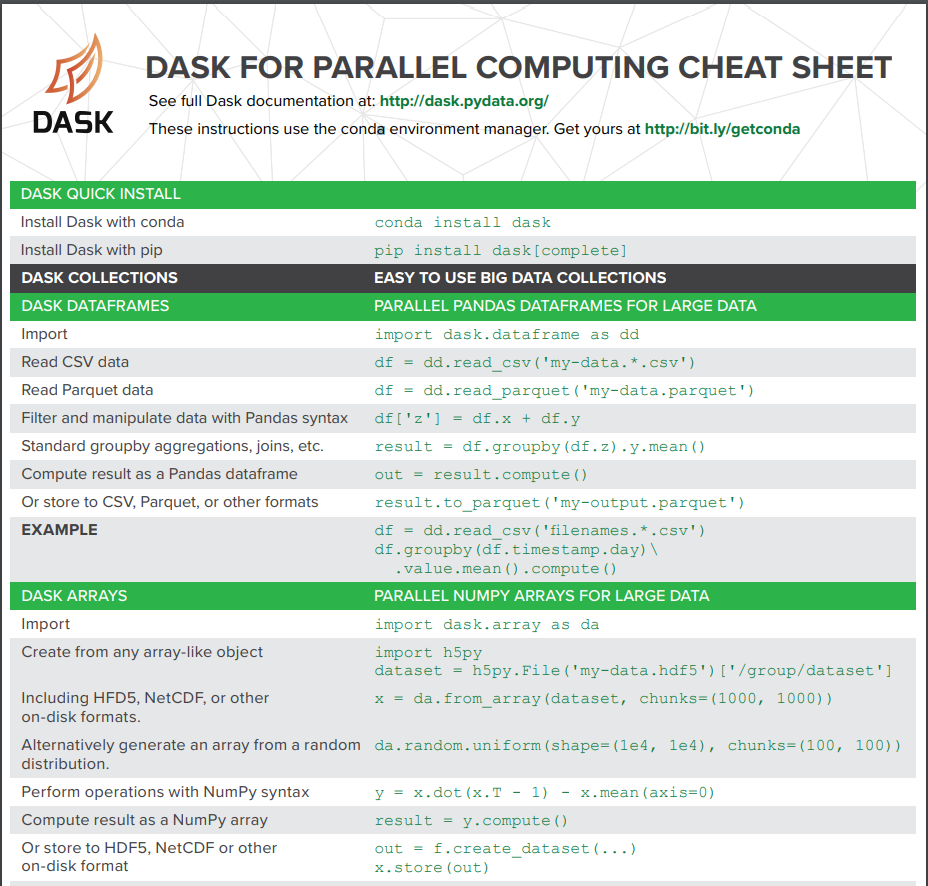

PySpark

Big-O

Resources

Neural Networks

Artificial neural networks (ANN) or connectionist systems are computing systems vaguely inspired by the biological neural networks that constitute animal brains. The neural network itself is not an algorithm, but rather a framework for many different machine learning algorithms to work together and process complex data inputs. Such systems “learn” to perform tasks by considering examples, generally without being programmed with any task-specific rules.

Neural Networks Graphs

Graph Neural Networks (GNNs) for representation learning of graphs broadly follow a neighborhood aggregation framework, where the representation vector of a node is computed by recursively aggregating and transforming feature vectors of its neighboring nodes. Many GNN variants have been proposed and have achieved state-of-the-art results on both node and graph classification tasks.

Machine Learning Overview

Machine learning (ML) is the scientific study of algorithms and statistical models that computer systems use to progressively improve their performance on a specific task. Machine learning algorithms build a mathematical model of sample data, known as “training data”, in order to make predictions or decisions without being explicitly programmed to perform the task. Machine learning algorithms are used in the applications of email filtering, detection of network intruders, and computer vision, where it is infeasible to develop an algorithm of specific instructions for performing the task.

Machine Learning: Scikit-learn algorithm

This machine learning cheat sheet helps you find the right estimator for the job, which is often the most difficult part. The flowchart points you to the documentation and a rough guide for each estimator, so you can learn more about each kind of problem and how to solve it.

Scikit-Learn

Scikit-learn (formerly scikits.learn) is a free software machine learning library for the Python programming language. It features various classification, regression and clustering algorithms including support vector machines, random forests, gradient boosting, k-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy.

Machine Learning: Algorithm Cheat Sheet

This machine learning cheat sheet from Microsoft Azure will help you choose the appropriate machine learning algorithm for your predictive analytics solution. First, the cheat sheet asks about the nature of your data, and then it suggests the best algorithm for the job.

Python for Data Science

TensorFlow

In May 2017 Google announced the second-generation of the TPU, as well as the availability of the TPUs in Google Compute Engine. The second-generation TPUs deliver up to 180 teraflops of performance, and when organized into clusters of 64 TPUs provide up to 11.5 petaflops.

Keras

In 2017, Google’s TensorFlow team decided to support Keras in TensorFlow’s core library. Chollet explained that Keras was conceived to be an interface rather than an end-to-end machine-learning framework. It presents a higher-level, more intuitive set of abstractions that make it easy to configure neural networks regardless of the backend scientific computing library.

Numpy

NumPy targets the CPython reference implementation of Python, which is a non-optimizing bytecode interpreter. Mathematical algorithms written for this version of Python often run much slower than compiled equivalents. NumPy addresses the slowness problem partly by providing multidimensional arrays, along with functions and operators that operate efficiently on arrays. Taking advantage of them requires rewriting some code, mostly inner loops, using NumPy.

Pandas

The name ‘Pandas’ is derived from the term “panel data”, an econometrics term for multidimensional structured data sets.

Data Wrangling

The term “data wrangler” is starting to infiltrate pop culture. In the 2017 movie Kong: Skull Island, one of the characters, played by actor Marc Evan Jackson, is introduced as “Steve Woodward, our data wrangler”.

Data Wrangling with dplyr and tidyr

Scipy

SciPy builds on the NumPy array object and is part of the NumPy stack which includes tools like Matplotlib, pandas and SymPy, and an expanding set of scientific computing libraries. This NumPy stack has similar users to other applications such as MATLAB, GNU Octave, and Scilab. The NumPy stack is also sometimes referred to as the SciPy stack.

Matplotlib

matplotlib is a plotting library for the Python programming language and its numerical mathematics extension NumPy. It provides an object-oriented API for embedding plots into applications using general-purpose GUI toolkits like Tkinter, wxPython, Qt, or GTK+. There is also a procedural “pylab” interface based on a state machine (like OpenGL), designed to closely resemble that of MATLAB, though its use is discouraged. SciPy makes use of matplotlib. pyplot is a matplotlib module which provides a MATLAB-like interface. matplotlib is designed to be as usable as MATLAB, with the ability to use Python, with the advantage that it is free.

Data Visualization

PySpark

Big-O

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. It is a member of a family of notations invented by Paul Bachmann, Edmund Landau and others, collectively called Bachmann–Landau notation or asymptotic notation.

Resources

Big-O Algorithm Cheat Sheet

Bokeh Cheat Sheet

Data Science Cheat Sheet

Data Wrangling Cheat Sheet

Data Wrangling

Ggplot Cheat Sheet

Keras Cheat Sheet

Keras

Machine Learning Cheat Sheet

ML Cheat Sheet

Matplotlib Cheat Sheet

Matplotlib

Neural Networks Cheat Sheet

Neural Networks Graph Cheat Sheet

Neural Networks

Numpy Cheat Sheet

NumPy

Pandas Cheat Sheet

Pandas

Pyspark Cheat Sheet

Scikit Cheat Sheet

Scikit-learn

Scikit-learn Cheat Sheet

Scipy Cheat Sheet

SciPy

TensorFlow Cheat Sheet

TensorFlow

“Tidy datasets are all alike, but every messy dataset is messy in its own way.” - Hadley Wickham

The notes below are modified from the excellent Dataframe Manipulation lesson, which is freely available on the Software Carpentry website.

Load the libraries:
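A minimal sketch of the loading step, assuming the packages named in these notes (tibble, tidyr, dplyr and ggplot2) are already installed. The exact set loaded in the original notes is not shown, so treat this list as an assumption.

```r
# Assumed package set, taken from the packages named in these notes
library(tibble)   # tibbles, a modern take on data frames
library(tidyr)    # reshaping between long and wide layouts
library(dplyr)    # select(), filter(), group_by(), summarize(), mutate()
library(ggplot2)  # plotting
```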

In this section, we will learn a consistent way to organize the data in R, called tidy data. Getting the data into this format requires some upfront work, but that work pays off in the long term. Once you have tidy data and the tools provided by the tidyr, dplyr and ggplot2 packages, you will spend much less time munging/wrangling data from one representation to another, allowing you to spend more time on the analytic questions at hand.

Tibble

For now, we will use a tibble instead of R’s traditional data.frame. A tibble is a data frame, but it tweaks some older behaviors to make life a little easier.

Let’s use Keeling_Data as an example:

You can coerce a data.frame to a tibble using the as_tibble() function:
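As a rough illustration, the coercion might look like the sketch below. The `keeling_df` data frame is a hypothetical stand-in for Keeling_Data: its column names follow the ones used later in these notes, and its values are made up.

```r
library(tibble)

# Hypothetical stand-in for Keeling_Data (a plain data.frame); values are made up
keeling_df <- data.frame(
  year    = c(2000, 2000, 2001),
  month   = c(1, 2, 1),
  co2     = c(369.3, 369.5, 370.6),
  quality = c(1, 1, 0)
)

# Coerce the data.frame to a tibble
keeling_tbl <- as_tibble(keeling_df)

class(keeling_df)   # a plain data.frame
class(keeling_tbl)  # still a data frame, now with the tibble classes as well
keeling_tbl         # printing a tibble shows its dimensions and column types
```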

Tidy dataset

There are three interrelated rules which make a dataset tidy:

- Each variable must have its own column;

- Each observation must have its own row;

- Each value must have its own cell;

These three rules are interrelated because it’s impossible to only satisfy two of the three. That interrelationship leads to an even simpler set of practical instructions:

- Put each dataset in a tibble

- Put each variable in a column

Why ensure that your data is tidy? There are two main advantages:

There’s a general advantage to picking one consistent way of storing data. If you have a consistent data structure, it’s easier to learn the tools that work with it because they have an underlying uniformity. If you ensure that your data is tidy, you’ll spend less time fighting with the tools and more time working on your analysis.

There’s a specific advantage to placing variables in columns because it allows R’s vectorized nature to shine. That makes transforming tidy data feel particularly natural.

tidyr, dplyr, and ggplot2 are designed to work with tidy data.

The dplyr package provides a number of very useful functions for manipulating dataframes in a way that will reduce the self-repetition, reduce the probability of making errors, and probably even save you some typing. As an added bonus, you might even find the dplyr grammar easier to read.

Here we’re going to cover commonly used functions as well as using pipes %>% to combine them.

Using select()

Use the select() function to keep only the variables (columns) you select.
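A minimal sketch of select() in the 'normal' (non-pipe) grammar. The `keeling_demo` tibble is a hypothetical stand-in for Keeling_Data_tbl with made-up values.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year         = c(2000, 2000, 2001, 2001),
  month        = c(1, 2, 1, 2),
  decimal_date = c(2000.042, 2000.125, 2001.042, 2001.125),
  co2          = c(369.3, 369.5, 370.6, 371.5),
  quality      = c(1, 1, 0, 1)
)

# Keep only the year, month and co2 columns
co2_only <- select(keeling_demo, year, month, co2)
co2_only
```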

The pipe symbol %>%

Above we used ‘normal’ grammar, but the strengths of dplyr and tidyr lie in combining several functions using pipes. Since the pipes grammar is unlike anything we’ve seen in R before, let’s repeat what we’ve done above using pipes.

x %>% f(y) is the same as f(x, y)

The above lines mean we first call the Keeling_Data_tbl tibble and pass it on, using the pipe symbol %>%, to the next step, which is the select() function. In this case, we don’t specify which data object we use in the select() function, since it gets that from the previous pipe. The select() function then takes what it gets from the pipe, in this case the Keeling_Data_tbl tibble, as its first argument. By using the pipe, we can take the output of the previous step as input for the next one, so that we avoid defining and calling unnecessary temporary variables. You will start to see the power of the pipe later.
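To make the equivalence concrete, the sketch below runs the same select() call with and without the pipe. The `keeling_demo` tibble is a hypothetical stand-in for Keeling_Data_tbl with made-up values.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# Piped form: the tibble on the left becomes the first argument of select()
piped <- keeling_demo %>% select(year, month, co2)

# Equivalent 'normal' form: the tibble is passed explicitly
nested <- select(keeling_demo, year, month, co2)

# Compare the two results
all.equal(piped, nested)
```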

Using filter()

Use filter() to get values (rows):
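A minimal sketch of filter(), which keeps the rows that satisfy a logical condition. The `keeling_demo` tibble is a hypothetical stand-in for Keeling_Data_tbl with made-up values.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# Keep only the rows measured in 2001
rows_2001 <- keeling_demo %>% filter(year == 2001)
rows_2001
```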

If we now want to move forward with the above tibble, but only with quality 1 , we can combine select() and filter() functions:

You see here we have used the pipe twice, and the scripts become really clean and easy to follow.
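One possible way to chain the two steps is sketched here, again with a hypothetical `keeling_demo` tibble and made-up values. In this sketch filter() runs before select(), because select() drops the quality column that the filter condition needs.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# First pipe: keep rows with quality 1; second pipe: keep three columns
good_co2 <- keeling_demo %>%
  filter(quality == 1) %>%
  select(year, month, co2)
good_co2
```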

Using group_by() and summarize()

Now try to ‘group’ the monthly data using the group_by() function, and notice how the output tibble changes:
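A small sketch of grouping by month, using a hypothetical `keeling_demo` tibble with made-up values. The data themselves are unchanged; only the grouping metadata is added.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

by_month <- keeling_demo %>% group_by(month)

by_month              # the printed header now includes a Groups line
group_vars(by_month)  # name(s) of the grouping variable(s)
n_groups(by_month)    # number of groups
```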

The group_by() function is much more exciting in conjunction with the summarize() function. This allows us to create new variable(s) by applying functions to each of the month-specific groups. That is to say, using the group_by() function, we split our original data frame into multiple pieces, and then run functions (e.g., mean() or sd()) on each piece within summarize().

Here we create a new variable (column) monthly_mean, and append it to the groups (month in this case). Now, we get a so-called monthly climatology.
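The monthly climatology described above could be computed along these lines. The `keeling_demo` tibble is a hypothetical stand-in for Keeling_Data_tbl with made-up values, so the resulting means are illustrative only.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# One row per month, holding the mean co2 over all years
monthly_clim <- keeling_demo %>%
  group_by(month) %>%
  summarize(monthly_mean = mean(co2))
monthly_clim
```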

You can also use arrange() and desc() to sort the data:
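A sketch of sorting the monthly means from highest to lowest, using a hypothetical `keeling_demo` tibble with made-up values. Dropping desc() would sort in ascending order instead.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# Monthly means, sorted so the month with the highest mean comes first
sorted_clim <- keeling_demo %>%
  group_by(month) %>%
  summarize(monthly_mean = mean(co2)) %>%
  arrange(desc(monthly_mean))
sorted_clim
```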

Let’s add more statistics to the monthly climatology:

Here we call n() to get the number of observations in each group.
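A sketch of a summary with several statistics per month. The column names `monthly_sd` and `n_obs` are illustrative choices, and `keeling_demo` is a hypothetical stand-in for Keeling_Data_tbl with made-up values.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

monthly_stats <- keeling_demo %>%
  group_by(month) %>%
  summarize(
    monthly_mean = mean(co2),  # mean co2 per month
    monthly_sd   = sd(co2),    # standard deviation per month
    n_obs        = n()         # number of rows in each group
  )
monthly_stats
```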

Using mutate()

We can also create new variables (columns) using the mutate() function. Here we create a new column co2_ppb by simply scaling co2 by a factor of 1000.
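A minimal sketch of that mutate() call, using a hypothetical `keeling_demo` tibble with made-up values. The existing columns are kept and the new one is appended.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# Add a co2_ppb column, scaled from co2 by a factor of 1000
with_ppb <- keeling_demo %>% mutate(co2_ppb = co2 * 1000)
with_ppb
```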

When creating new variables, we can hook this with a logical condition. A simple combination of mutate() and ifelse() facilitates filtering right where it is needed: in the moment of creating something new. This easy-to-read statement is a fast and powerful way of discarding certain data or for updating values depending on this given condition.

Let’s create a new variable co2_new, which is equal to co2 when quality == 1 and NA otherwise:
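The mutate() and ifelse() combination might be sketched as follows, with a hypothetical `keeling_demo` tibble and made-up values. The third row has quality 0, so its co2_new is set to NA.

```r
library(dplyr)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  year    = c(2000, 2000, 2001, 2001),
  month   = c(1, 2, 1, 2),
  co2     = c(369.3, 369.5, 370.6, 371.5),
  quality = c(1, 1, 0, 1)
)

# co2_new copies co2 where quality is 1 and is NA everywhere else
flagged <- keeling_demo %>%
  mutate(co2_new = ifelse(quality == 1, co2, NA))
flagged
```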

Combining dplyr and ggplot2

Just as we used %>% to pipe data along a chain of dplyr functions we can use it to pass data to ggplot(). Because %>% replaces the first argument in a function we don’t need to specify the data = argument in the ggplot() function.

By combining dplyr and ggplot2 functions, we can make figures without creating any new variables or modifying the data.

As you will see, this is much easier than what we have done in Section 3. This is the power of a tidy dataset and its related functions!

Let’s plot CO2 of the same month as a function of decimal_date, with the color option:
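One way such a plot could be built is sketched here. The `keeling_demo` tibble is a hypothetical stand-in for Keeling_Data_tbl with made-up values, and wrapping month in factor() is a design choice of this sketch so that each month gets its own discrete color.

```r
library(dplyr)
library(ggplot2)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  month        = c(1, 2, 1, 2),
  decimal_date = c(2000.042, 2000.125, 2001.042, 2001.125),
  co2          = c(369.3, 369.5, 370.6, 371.5)
)

# The piped tibble becomes the data argument of ggplot()
p_color <- keeling_demo %>%
  ggplot(aes(x = decimal_date, y = co2, color = factor(month))) +
  geom_line() +
  geom_point() +
  labs(color = "month")
p_color
```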

Or plot the same data but in panels (facets), with the facet_wrap function:
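A sketch of the faceted version, with the same caveats: `keeling_demo` is a hypothetical stand-in for Keeling_Data_tbl and its values are made up.

```r
library(dplyr)
library(ggplot2)

# Hypothetical stand-in for Keeling_Data_tbl; values are made up
keeling_demo <- tibble(
  month        = c(1, 2, 1, 2),
  decimal_date = c(2000.042, 2000.125, 2001.042, 2001.125),
  co2          = c(369.3, 369.5, 370.6, 371.5)
)

# One panel per month instead of one color per month
p_facet <- keeling_demo %>%
  ggplot(aes(x = decimal_date, y = co2)) +
  geom_line() +
  geom_point() +
  facet_wrap(~ month)
p_facet
```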

The ‘long’ layout or format is where:

- each column is a variable

- each row is an observation

For the ‘wide’ format, each row is often a site/subject/patient and you have multiple observation variables containing the same type of data. These can be either repeated observations over time, or observation of multiple variables (or a mix of both).

You may find that data input is simpler in the ‘wide’ format, or that some other applications prefer it. However, many of R’s functions have been designed assuming you have ‘long’ formatted data.

Here we use the tidyr package to efficiently transform the data shape regardless of the original format.

‘Long’ and ‘wide’ layouts mainly affect readability. For humans, the ‘wide’ format is often more intuitive since we can often see more of the data on the screen due to its shape. However, the ‘long’ format is more machine-readable and is closer to the formatting of databases.

Long to wide format with spread()

Spread rows into columns:
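A minimal sketch of spread(), using a hypothetical long-format tibble with made-up values. Note that recent tidyr releases mark spread() as superseded by pivot_wider(), although spread() remains available.

```r
library(tidyr)
library(dplyr)

# Hypothetical long-format data: one row per (year, month); values are made up
co2_long <- tibble(
  year  = c(2000, 2000, 2001, 2001),
  month = c(1, 2, 1, 2),
  co2   = c(369.3, 369.5, 370.6, 371.5)
)

# Each distinct month becomes its own column, filled with the co2 values
co2_wide <- co2_long %>% spread(key = month, value = co2)
co2_wide
```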

Wide to long format with gather()

Gather columns into rows:
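A minimal sketch of gather(), using a hypothetical wide-format tibble whose `jan` and `feb` columns and values are made up. Recent tidyr releases mark gather() as superseded by pivot_longer(), although gather() remains available.

```r
library(tidyr)
library(dplyr)

# Hypothetical wide-format data: one row per year, one column per month
co2_wide <- tibble(
  year = c(2000, 2001),
  jan  = c(369.3, 370.6),
  feb  = c(369.5, 371.5)
)

# Stack the jan and feb columns into a month/co2 pair of columns
co2_long <- co2_wide %>% gather(key = "month", value = "co2", jan, feb)
co2_long
```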

Exercise #1

Please go over the notes once again and make sure you understand the scripts.

Exercise #2

Use the tools/functions covered in this section to solve “More about Mauna Loa CO2” in Lab 01.

R Dataframe Cheat Sheet

- R for Data Science, see chapter 10 and 12.

- Data wrangling with R, with a video